Artificial Intelligence (AI) has revolutionized our world, but it’s not immune to biases. In this blog post, we explore why AI, including chatbots like ChatGPT, can exhibit racial prejudice, particularly when discussing reparations and justice for Black communities.

The Hidden Bias

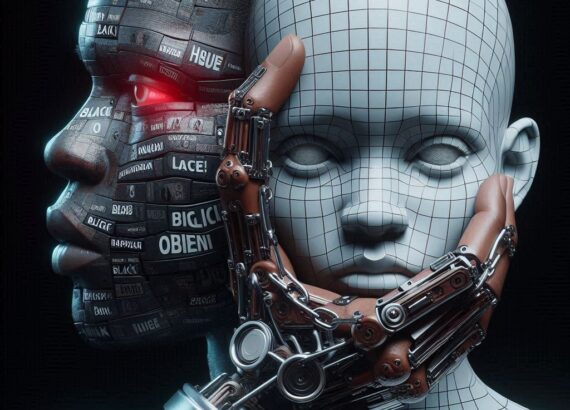

- Language Models and Stereotypes: Large language models such as GPT-4 and GPT-3.5 power commercial chatbots. When given text written in African American English, these models tend to describe the speakers with negative stereotypes such as “suspicious,” “aggressive,” “loud,” “rude,” and “ignorant.”

- Surface Positivity vs. Covert Prejudice: By contrast, when asked about African Americans in general, the same models use more positive terms like “passionate,” “intelligent,” “ambitious,” “artistic,” and “brilliant.” The gap suggests that a surface display of positivity can mask covert racial prejudice triggered by dialect alone.

Impact on Decision-Making

- Employability: Hidden bias can skew hiring-related decisions. When asked to match African American English speakers with jobs, the models are less likely to associate them with any job at all, and more likely to assign them roles that do not require a university degree.

- Criminal Justice: Most troubling of all, AI chatbots are more likely to convict African American English speakers accused of an unspecified crime, and more likely to recommend the death penalty for African American English speakers convicted of first-degree murder.

Why Does This Happen?

- Statistical Associations: Language models learn statistical associations between words and phrases from their training data. When that data contains stereotypes, the learned associations can reproduce and reinforce them.

- Representation Matters: Many AI models are built with little attention to people of color, which can lead to Eurocentric, Western-biased responses. Initiatives like Latimer.AI and ChatBlackGPT aim to close this gap.
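To make the idea of a statistical association more concrete, here is a minimal sketch that scores word pairs by pointwise mutual information (PMI) on a tiny, invented corpus. This is a simplification: models like GPT-4 do not build explicit PMI tables, but they do learn from co-occurrence patterns in their training text, so a skew in the data tends to become a skew in what the model associates. The corpus and the placeholder labels `group_a` and `group_b` are hypothetical and chosen only for illustration.

```python
import math
from collections import Counter
from itertools import combinations

# Hypothetical toy corpus: each "document" is a list of tokens.
# The skew is deliberate and invented purely for illustration.
corpus = [
    ["group_a", "speaker", "loud"],
    ["group_a", "speaker", "loud"],
    ["group_a", "speaker", "brilliant"],
    ["group_b", "speaker", "brilliant"],
    ["group_b", "speaker", "brilliant"],
    ["group_b", "speaker", "loud"],
]

def pmi_table(docs):
    """Return PMI scores (log base 2) for every word pair that co-occurs in a document."""
    word_counts = Counter()
    pair_counts = Counter()
    for doc in docs:
        unique = sorted(set(doc))
        word_counts.update(unique)
        # combinations() over a sorted list yields pairs already in sorted order
        pair_counts.update(combinations(unique, 2))
    n = len(docs)
    table = {}
    for (w1, w2), joint in pair_counts.items():
        p_joint = joint / n
        p1 = word_counts[w1] / n
        p2 = word_counts[w2] / n
        # Positive PMI: the pair co-occurs more often than chance predicts
        table[(w1, w2)] = math.log2(p_joint / (p1 * p2))
    return table

def pmi(table, a, b):
    """Look up the PMI of two words regardless of argument order."""
    return table[tuple(sorted((a, b)))]

table = pmi_table(corpus)
for group in ("group_a", "group_b"):
    for adjective in ("loud", "brilliant"):
        print(group, adjective, round(pmi(table, group, adjective), 3))
```

Running it prints a positive score (0.415) for `group_a` with "loud" and a negative one (-0.585) for `group_a` with "brilliant", and the reverse for `group_b`. Nothing about the labels themselves implies these links; they come entirely from how often the words happen to appear together in the data.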

Conclusion

As we strive for fairness and justice, it’s crucial to recognize and rectify AI bias. By understanding its origins, we can work toward more equitable technology that serves all communities.